I often get lost in the indices when dealing with differentiation wrt multi-dimensional quantities. So writing up some intuitions and identities that are useful. The cheatsheet from Imperial College Department of Computing is also quite useful (at the bottom of this post):

Gradient points in direction of steepest ascent

Consider a function or two variables f(x,y) which you can imagine plotting in a 3d graph (a surface with the values of f on the z axis).

The gradient of f is deinfed as nabla f = column vector partial df/dx, df/dy.

at a given point (x,y), if we ask how much would f change if we moved slightly in a direction v (v1, v2) then the answer would be v1 df/dx +v2 df/dy partial which is just the dot product [dot product in latex].

fixing the size of v this is maximised when v is parallel to nabla f. Geometrically we can easily see that this is maximised when you point orthogonal to the current contour (going directly to the next contour). So nabla f points in direction of steepest ascent

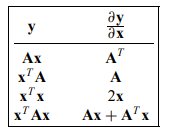

Matrix calculus identities

The below are a set of useful identities where I have found that using the einstein summation notation (summing over repeated indices) is a good way to derive them quickly.

eg to prove that $$\frac{\partial}{\partial \mathbf{x}} \left( \mathbf{x}^\top A \mathbf{x} \right) = A\mathbf{x} + A^\top \mathbf{x}$$

Let $$y = \mathbf{x}^\top A \mathbf{x} = x_i A_{ij} x_j$$

Then

\begin{align}

\frac{\partial y}{\partial x_k} &= \frac{\partial}{\partial x_k} \left( x_i A_{ij} x_j \right) \\

&= \left( \frac{\partial x_i}{\partial x_k} \right) A_{ij} x_j + x_i A_{ij} \left( \frac{\partial x_j}{\partial x_k} \right) \\

&= \delta_{ik} A_{ij} x_j + x_i A_{ij} \delta_{jk} \\

&= A_{kj} x_j + x_i A_{ik} \\

&= (A \mathbf{x})_k + (A^\top \mathbf{x})_k \\

\end{align}

ie we have $$\frac{\partial}{\partial \mathbf{x}} \left( \mathbf{x}^\top A \mathbf{x} \right) = A \mathbf{x} + A^\top \mathbf{x}$$

Pingback: Linear Regression – Maths with Ronak

Pingback: Lagrange multipliers and optimisation Intuition – Maths with Ronak